Digital Feature: So you’ve rolled out new software. How do you make it stick?

A. SULLIVAN, Hexagon, Chattanooga, Tennessee

It is a great feeling when a software project ends – the system is installed, the users are trained and adoption seems to be trending up. Everyone is happy and among the project champions and implementers, there are high-fives all around.

Cut to nine months later: the team is being trained in some new process, and the solution that was implemented all those months ago has lost the buzz that it had when it was new. Worse, usage of the system, which was good initially, seems to be trending down. Unfortunately, all the reasons why the system was originally needed still exist, but the ROI that was the driving force behind the project cannot be realized if people are not using the tools the project provided.

This is a common situation, and as vendors of enterprise software solutions, we here at Hexagon may see it more than most. So, what do companies do? What works?

Unfortunately, what does not work in the long term is simply “cracking the whip.” While a management memo to “just do it” (use the system) may generate a brief improvement, it rarely lasts; it is nothing personal, it is just human nature. People resist change, and in a busy environment (which we all share), people prioritize those activities that seem to matter most (to supervisors, peers and the organization at large). Even when a change is well explained, and everyone buys in and agrees on the benefits, if your workforce – who initially embraced the system – doesn’t receive any feedback or reinforcement, it is all too easy for them to subconsciously de-prioritize the new process(es), and fall back on older, more ingrained habits. And so, the solution that everyone once loved now risks being relegated to your company’s pile of under-utilized, forgotten software.

A few months ago, a customer came to us asking for our recommendations for adopting best practices. They had implemented Hexagon’s j5 solution over a year ago, which included software tools for operational processes such as operator shift logging, shift-to-shift handover and e-permitting. Now they were seeing indications that their users were not documenting their handovers, even though a year ago, everyone was on board, and the new system made it relatively painless.

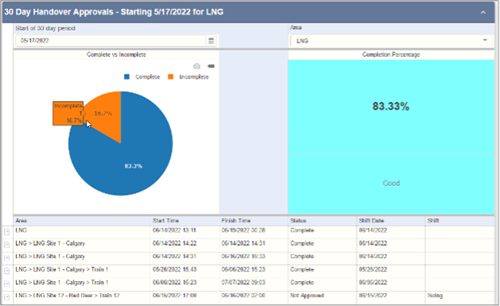

We recommended that the first step be to institute metrics. Often these types of issues are raised based on anecdotes or intuition, with little actual data. So, a good first step is to collect empirical information to 1) verify that there is an issue and 2) establish a baseline so that the impact of any improvement efforts can be measured. For this customer, we recommended something like the dashboard shown here, which allowed the management team to keep track of which teams were completing handovers and which ones were not.

An additional advantage of this dashboard was that reviewers could quickly open any handover that was part of the report to see which team members were involved and whether there was some extenuating circumstance that may have justified skipping the approval step (such as an urgent issue in the plant).

Often the act of measuring the relevant KPIs (and making the results generally visible) is enough to improve system usage. After all, what gets measured gets done, and sometimes a dashboard provides just the reinforcement needed. The jury is still out on whether this step resolves the issue for this customer, but if not, then at least they have a clearer idea of how serious the issue might be.
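As a rough illustration of the "verify and baseline" idea, the completion metric behind such a dashboard can be sketched in a few lines of Python. This is only a sketch under stated assumptions: the `Handover` record, its fields, the team names and the 90% threshold are all hypothetical and made up for this example. It does not represent j5's data model, API or reporting tools.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Handover:
    """One scheduled shift handover (hypothetical record, not a j5 schema)."""
    team: str
    shift_date: str   # ISO date of the shift, e.g. "2024-03-01"
    completed: bool   # True if the handover was documented


def completion_rate_by_team(handovers):
    """Return {team: fraction of scheduled handovers that were completed}."""
    scheduled = defaultdict(int)
    done = defaultdict(int)
    for h in handovers:
        scheduled[h.team] += 1
        if h.completed:
            done[h.team] += 1
    return {team: done[team] / scheduled[team] for team in scheduled}


def teams_below(rates, threshold):
    """List teams whose completion rate is below the threshold, worst first."""
    low = [team for team, rate in rates.items() if rate < threshold]
    return sorted(low, key=lambda team: rates[team])


# Made-up sample data, for illustration only.
sample = [
    Handover("Unit A", "2024-03-01", True),
    Handover("Unit A", "2024-03-02", True),
    Handover("Unit B", "2024-03-01", True),
    Handover("Unit B", "2024-03-02", False),
    Handover("Unit C", "2024-03-01", False),
    Handover("Unit C", "2024-03-02", False),
]

baseline = completion_rate_by_team(sample)       # the baseline to measure against
flagged = teams_below(baseline, threshold=0.9)   # assumed target of 90%
print(baseline)
print(flagged)
```

Recomputing the same rates after each improvement effort and comparing them with the stored baseline is what makes the impact measurable, rather than anecdotal.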

In this case, because the system was j5, other options could be leveraged to increase the visibility and accountability around handover completion. Recommendations included adjusting supervisor handovers to include a view of their subordinates’ handover statuses. They could also set up the system to send supervisors an email as each of their team’s handovers was completed. As a last resort, operator handovers could be adjusted to require supervisor approval, which can be highly effective, even though it should not be necessary in a perfect world.

For this customer, we had a few more recommendations in case the data did show that this was a real issue and publicizing (the lack of) user performance didn’t lead to a resolution. No software can be effective or useful if users are actively subverting it, but this is rarely the case. What we have seen is that the vast majority of end users want to do their jobs well and according to the direction they’ve been given, if they can. In this shift handover example, if users still weren’t using the software, then there was probably an actual cause – and if the cause could be identified, there was a good chance that it could be corrected (or at least mitigated).

As a potential follow-up, we recommended a workshop or focus group with some or all of the affected users to discuss the questions provided below:

- What are they doing for handover (instead of using j5)? Are they not doing it at all (!?!), or do they have some other process they prefer? If they do have some other process – then what are the differences? Does it take less time, not require a computer, etc.?

- Are there obstacles preventing them (the end users) from using the process as designed? Sometimes the turnover window is too short or at the wrong time – maybe users do not have access to a computer at the end of their shift.

- Is there some characteristic of the handover configurations or behavior in the system that annoys them so much that they just don’t want to use it? It could be that users think the handovers are unreasonably long, ask questions they cannot easily answer or don’t include the things they think are important.

N.B.: These kinds of questions can be tricky – and it is very important in this kind of data gathering to focus on the process – not to assign blame, either to those not using the process or those who may have designed it in the first place. To be effective, the underlying, overriding assumption must be that the entire team is, in fact, a team – and that the goal is to find the best way to get the job done.

Hope this article was useful, or at least got you thinking. If you enjoyed this one, please be sure to read the next in this series, What goes up must come down: Understanding fluctuating oil and gas demand.

About the author

Alan Sullivan is a Senior Industry Consultant with Hexagon’s Asset Lifecycle Intelligence division. With over 30 years as a software consultant focused on plant-floor applications, Alan specializes in gathering requirements and working with large companies to design and implement software solutions that help their facilities address challenges and improve operations. In the last 20 years, Alan has led projects and delivered successful solutions for clients in LNG, automotive manufacturing, nuclear power generation and other industries. As part of Hexagon’s pre-sales team focused on Operations and Maintenance, Alan works with owner operators to discuss their business processes, requirements and challenges – and how Hexagon solutions might help.